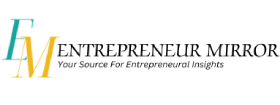

Thanks to Microsoft’s latest artificial intelligence technology, the Mona Lisa can do more than just smile.

Microsoft researchers unveiled a new artificial intelligence (AI) model, VASA-1, last week that can automatically produce a realistic-looking video of a person speaking from a still photograph of their face and an audio clip of them talking. The videos feature convincing lip-syncing and natural head and face movements. They can be created from photorealistic faces, cartoons, or artwork. In one demo video, researchers animated the Mona Lisa so that she recites a comedic rap performed by actress Anne Hathaway.

Researchers say the model was trained on many videos of people speaking, which taught it to identify natural facial and head motions such as “lip motion, (non-lip) expression, eye gaze, and blinking, among others.” As a result, when VASA-1 animates a still photo, the resulting video looks more realistic.

For instance, one sample video shows a person with furrowed brows and pursed lips who appears to be playing video games.

Additionally, the AI tool can be directed to generate a video in which the person shows a particular mood or looks in a specific direction.
