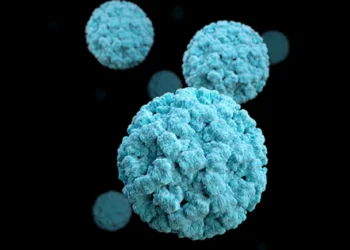

Campaigners warn that OpenAI’s new ChatGPT feature in the US raises privacy concerns because it can analyze people’s medical records to give them better responses. The company is inviting users to share their medical records and data from apps such as MyFitnessPal, which will be analyzed to provide tailored recommendations.

OpenAI said that ChatGPT Health was not intended for “diagnosis or treatment” and that its conversations would be stored separately from other chats and would not be used to train its AI models.

According to Andrew Crawford of the US nonprofit Center for Democracy and Technology, it is “crucial” to keep “airtight” security measures in place for users’ health information.

Crawford said health data is among the most sensitive information people can disclose, so it must be protected, even as new AI health tools promise to empower patients and improve health outcomes. He added that AI companies are “leaning hard” into making their services more personalized in order to increase their value.

“Especially as OpenAI moves toward investigating advertising as a business model, it’s crucial that the gap between this sort of health data and memories that ChatGPT captures from other discussions is airtight,” he said.
