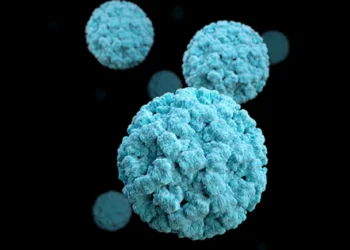

According to researchers, despite the widespread use of artificial intelligence (AI) in a changing economic landscape, many businesses remain unaware of the risks it presents. “AI data/model poisoning,” which occurs when malicious actors intentionally alter the training data of an AI or machine learning model, has become a significant problem.

This attack mostly targets predictive or narrow AI solutions (task-focused AI systems) in the MLOps cycle (the process of deploying machine learning models). In generative AI solutions, the poisoning occurs not at the model level but in retrieval-augmented generation (RAG, answers based on retrieved information) and knowledge graphs (linked data used for understanding). The effect on agentic AI is the most worrisome, as poisoning there corrupts not only the output but also autonomous behaviour.

Consider, for example, an oil and gas company that uses AI for predictive maintenance. If a malicious operator quietly inserts modified sensor readings into the training data, the AI may fail to recognise real warning signs or even mistakenly flag healthy equipment as faulty. This could result in unexpected shutdowns, expensive repairs, and possible safety risks.

Similarly, in the financial industry, tainted stock data in the knowledge graph of a bank’s investing agent may lead the agent to make poor investment decisions, resulting in significant losses for clients. “AI poisoning compromises the accuracy of AI-driven judgements, leaving businesses open to financial losses and operational interruptions.”
